Imagine standing in a crowded marketplace, looking for a shop that sells the best coffee. You might ask the people closest to you for recommendations—trusting that those nearby share similar tastes or experiences. The K-Nearest Neighbours (K-NN) algorithm operates on a similar principle: it makes predictions based on the “neighbours” closest to a data point, using distance as the measure of similarity.

In the vast world of machine learning, K-NN is like an intuitive, people-driven approach—simple, yet powerful when wielded with understanding and care.

Understanding the Essence of K-NN

K-NN is a lazy learner. Instead of fitting a model in advance, it simply stores the training data and defers all computation until a query arrives, then makes a decision based on proximity to the stored examples. It’s like a student who doesn’t summarise the syllabus ahead of time but keeps the whole textbook at hand and knows exactly where to look when a question appears.
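To make “lazy” concrete, here is a minimal sketch in plain Python. The class name `LazyKNNClassifier` and the toy data are hypothetical, not taken from any library. `fit` only stores the examples, and every distance is computed inside `predict`.

```python
import math
from collections import Counter


class LazyKNNClassifier:
    """Minimal K-NN classifier (hypothetical): 'training' only stores the data."""

    def __init__(self, k=3):
        self.k = k
        self.points = []
        self.labels = []

    def fit(self, points, labels):
        # No model is built here; the training set is simply kept in memory.
        self.points = list(points)
        self.labels = list(labels)
        return self

    def predict(self, query):
        # All the work happens at query time: measure the distance to every stored point...
        distances = sorted(
            (math.dist(query, p), label) for p, label in zip(self.points, self.labels)
        )
        # ...then take a majority vote among the k closest labels.
        top_labels = [label for _, label in distances[: self.k]]
        return Counter(top_labels).most_common(1)[0][0]


clf = LazyKNNClassifier(k=3).fit(
    [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)],
    ["A", "A", "A", "B", "B", "B"],
)
print(clf.predict((2, 2)))  # A
```

Nothing is learned at `fit` time, so adding new examples is cheap, but every prediction pays the full cost of scanning the stored data.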

This approach allows K-NN to be flexible and adaptive. The number of neighbours, K, and the choice of distance metric determine how sensitive or generalised the model becomes. A small K follows the immediate neighbours closely, which captures fine local detail but also makes predictions sensitive to noise. A larger K smooths out fluctuations by considering a broader range of examples, at the risk of blurring genuine local patterns.
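A small, hypothetical example shows how K changes the outcome. The sketch assumes Euclidean distance and a plain majority vote. The data are made up so that a single noisy “B” point sits right next to the query, inside a cluster of “A” points.

```python
import math
from collections import Counter


def knn_classify(train, query, k):
    """Majority vote among the k training points closest to `query` (Euclidean)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Hypothetical toy data: a tidy cluster of "A" points plus one noisy "B" point.
train = [((0, 0), "A"), ((1, 0), "A"), ((0, 1), "A"), ((1, 1), "A"), ((0.6, 0), "B")]
query = (0.5, 0)

for k in (1, 3, 5):
    print(k, knn_classify(train, query, k))
# 1 B   <- a single noisy neighbour decides the outcome
# 3 A
# 5 A   <- the wider neighbourhood outvotes the noise
```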

Professionals starting their journey into analytics often explore this technique while pursuing a data scientist course, gaining hands-on experience with algorithms that balance simplicity and interpretability.

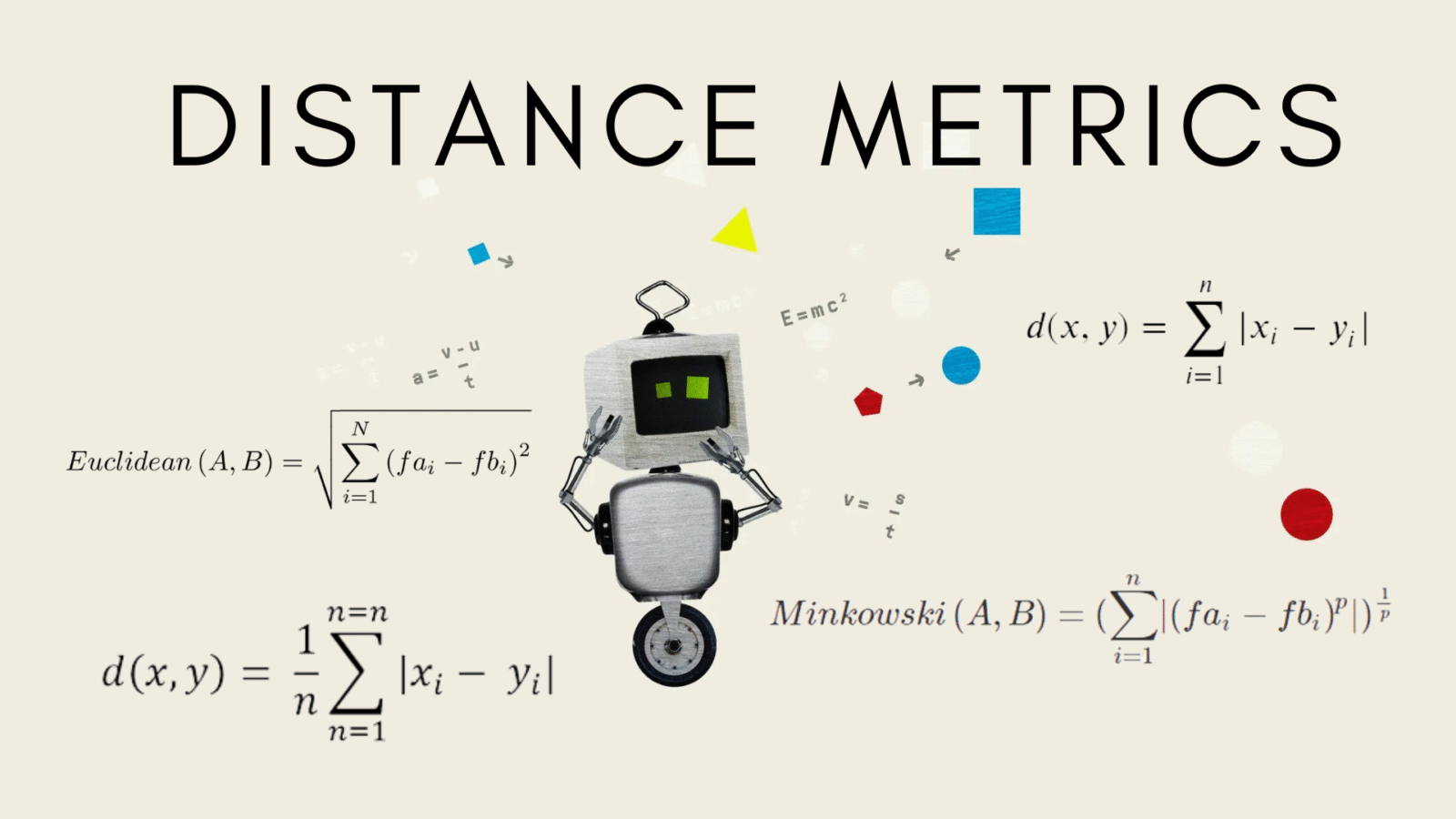

Distance Metrics: The Compass of K-NN

Imagine walking through a city using different types of maps—each one distorting distance in unique ways. The choice of distance metric in K-NN has a similar effect. It defines how the algorithm perceives proximity between data points.

- Euclidean distance works like straight-line walking—it’s the shortest path between two points.

- Manhattan distance measures the sum of horizontal and vertical paths, resembling movement along a city grid.

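- Minkowski distance generalises both: with its parameter p set to 1 it becomes Manhattan distance, and with p set to 2 it becomes Euclidean distance.

- Cosine distance compares the angle between two vectors rather than their length, which makes it a popular choice for high-dimensional data such as text represented as word counts.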
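Each of these metrics takes only a few lines of code. The sketch below uses plain Python, and the function names are illustrative. Note that Minkowski distance with p = 1 and p = 2 reproduces Manhattan and Euclidean distance respectively. Cosine distance is computed here as one minus cosine similarity, so it ignores vector length.

```python
import math


def euclidean(a, b):
    # Straight-line distance.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a, b):
    # Sum of absolute differences along each axis ("city grid" distance).
    return sum(abs(x - y) for x, y in zip(a, b))


def minkowski(a, b, p):
    # p = 1 gives Manhattan distance, p = 2 gives Euclidean distance.
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1 / p)


def cosine_distance(a, b):
    # 1 - cosine similarity: compares direction only, not magnitude.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for a zero vector")
    return 1 - dot / (norm_a * norm_b)


a, b = (0, 0), (3, 4)
print(euclidean(a, b))     # 5.0
print(manhattan(a, b))     # 7
print(minkowski(a, b, 2))  # 5.0
print(cosine_distance((1, 2), (2, 4)))  # close to 0: same direction, different length
```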

The selection of the right metric isn’t just a technical detail—it can reshape the algorithm’s understanding of data structure entirely. In real-world applications, analysts must experiment to find which “map” best represents their domain.
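As a small illustration of how the metric can change which neighbour counts as “closest”, take two hypothetical points and a query at the origin. Point A is 3 units away along one axis; point B is 2 units along each axis. Euclidean distance prefers B (about 2.83 versus 3), while Manhattan distance prefers A (3 versus 4).

```python
import math


def nearest(points, query, metric):
    # Return the name of the point that `metric` judges closest to `query`.
    return min(points, key=lambda name: metric(points[name], query))


def euclidean(a, b):
    return math.dist(a, b)


def manhattan(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))


points = {"A": (3, 0), "B": (2, 2)}
query = (0, 0)
print(nearest(points, query, euclidean))  # B  (2.83 vs 3.0)
print(nearest(points, query, manhattan))  # A  (3 vs 4)
```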

Learners enrolled in a data science course in Mumbai often explore these metrics practically, discovering how subtle shifts in distance measures can drastically alter model performance.

Local Averaging: Learning from the Neighbours

Once K-NN identifies the nearest data points, it looks for consensus. For regression tasks, it averages the neighbours’ values; for classification, it relies on a majority vote. It’s the equivalent of consulting friends before making a decision—the more reliable their opinions, the better the outcome.
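Both ways of reaching consensus can be sketched in a few lines, assuming Euclidean distance and hypothetical toy data. Regression averages the neighbours’ numeric targets, and classification takes a majority vote over their labels.

```python
import math
from collections import Counter


def k_nearest(train, query, k):
    # train: list of (features, target) pairs; returns the k closest pairs.
    return sorted(train, key=lambda item: math.dist(item[0], query))[:k]


def knn_regress(train, query, k):
    # Regression: average the neighbours' numeric targets.
    targets = [target for _, target in k_nearest(train, query, k)]
    return sum(targets) / len(targets)


def knn_classify(train, query, k):
    # Classification: majority vote over the neighbours' labels.
    labels = [label for _, label in k_nearest(train, query, k)]
    return Counter(labels).most_common(1)[0][0]


# Hypothetical data: (size,) -> price for regression, (score,) -> label for classification.
train_reg = [((50,), 100), ((60,), 120), ((70,), 140), ((200,), 500)]
train_cls = [((1,), "low"), ((2,), "low"), ((3,), "high"), ((9,), "high"), ((10,), "high")]

print(knn_regress(train_reg, (65,), k=3))   # 120.0  (mean of 120, 140, 100)
print(knn_classify(train_cls, (2.4,), k=3))  # low    (two "low" votes vs one "high")
```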

Local averaging can also be refined. The contribution of each neighbour can be weighted by its distance, so that closer points have more influence. This reduces the pull of neighbours that only just made it into the top K, such as distant outliers, and keeps predictions faithful to the local context.
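Here is a minimal sketch of distance weighting. It assumes inverse-distance weights (1 / d), which is one common choice but not the only one. The small `eps` term is an added assumption that avoids dividing by zero when a query coincides exactly with a training point.

```python
import math
from collections import defaultdict


def weighted_knn_classify(train, query, k, eps=1e-9):
    """Distance-weighted vote: each of the k neighbours votes with weight 1 / distance."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    scores = defaultdict(float)
    for features, label in nearest:
        # eps avoids division by zero when the query equals a training point.
        scores[label] += 1.0 / (math.dist(features, query) + eps)
    return max(scores, key=scores.get)


# Hypothetical data: one close "A" neighbour and two more distant "B" neighbours.
train = [((1, 0), "A"), ((3, 0), "B"), ((0, 3), "B"), ((10, 10), "B")]
print(weighted_knn_classify(train, (0, 0), k=3))  # A
```

A plain majority vote over the same three neighbours would return “B” (two votes to one). With weighting, the single neighbour at distance 1 outweighs the two at distance 3 (weight 1 versus roughly 0.67).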

K-NN’s strength lies in its transparency—every prediction can be traced back to the actual data points that influenced it. In an era dominated by black-box models, this interpretability makes K-NN particularly valuable for fields where explanations matter as much as accuracy.
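Because a prediction is just a vote over stored records, it is straightforward to return those records alongside the answer. The function name, record IDs and labels below are hypothetical.

```python
import math
from collections import Counter


def explain_prediction(train, query, k):
    """Return the predicted label plus the exact neighbours that produced it."""
    nearest = sorted(train, key=lambda rec: math.dist(rec[1], query))[:k]
    label = Counter(lbl for _, _, lbl in nearest).most_common(1)[0][0]
    # Evidence: (record id, distance to query, label) for each contributing neighbour.
    evidence = [
        (record_id, round(math.dist(features, query), 2), lbl)
        for record_id, features, lbl in nearest
    ]
    return label, evidence


# Hypothetical records: (id, features, label)
train = [
    ("rec-1", (1.0, 1.0), "approve"),
    ("rec-2", (1.0, 1.5), "approve"),
    ("rec-3", (5.0, 5.0), "reject"),
    ("rec-4", (2.0, 1.0), "reject"),
]
label, evidence = explain_prediction(train, (1.0, 1.0), k=3)
print(label)     # approve
print(evidence)  # [('rec-1', 0.0, 'approve'), ('rec-2', 0.5, 'approve'), ('rec-4', 1.0, 'reject')]
```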

Challenges in Applying K-NN

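Despite its appeal, K-NN isn’t without limitations. It can be computationally expensive for large datasets because, in its basic (brute-force) form, it must calculate the distance to every stored point for each prediction. It also struggles with irrelevant features, which add noise to every distance, and with skewed data distributions such as heavily imbalanced classes, where the majority class tends to dominate the vote.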
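The brute-force cost is easy to see in code: every query touches every training point. This sketch uses plain Python and a hypothetical 100 × 100 grid of training points, and computes one distance per stored point per query. Libraries typically offer index structures to reduce this work. For example, scikit-learn’s `KNeighborsClassifier` has an `algorithm` parameter that accepts `'kd_tree'`, `'ball_tree'` or `'brute'`.

```python
import heapq
import math


def brute_force_knn(train, query, k):
    """Exhaustive search: len(train) distance computations for every single query."""
    distances = [(math.dist(p, query), p) for p in train]
    return heapq.nsmallest(k, distances)


# Hypothetical training set: a 100 x 100 grid of points (10,000 in total).
train = [(x, y) for x in range(100) for y in range(100)]
nearest = brute_force_knn(train, (10.2, 20.2), k=3)
print(nearest[0][1])  # (10, 20)

# Scaling up: 1,000 queries against 10,000 points means 10 million distance computations.
queries = 1_000
print(queries * len(train))  # 10000000
```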

Feature scaling, normalisation, and dimensionality reduction are critical to keeping distance calculations fair. Without them, features measured on large scales (income in rupees, say, next to age in years) can dominate the distance and lead to misleading results.
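Here is a minimal sketch of min-max scaling, one common normalisation choice. It assumes hypothetical customer data with age in years and income in rupees. The scaling parameters are learned from the training points only and then applied to the query.

```python
import math


def min_max_scaler(train):
    """Learn per-feature min and range from training data; return a scaling function."""
    columns = list(zip(*train))
    mins = [min(c) for c in columns]
    # Guard against constant features, whose range would otherwise be zero.
    ranges = [(max(c) - min(c)) or 1.0 for c in columns]
    return lambda p: tuple((x - m) / r for x, m, r in zip(p, mins, ranges))


def nearest(train, query):
    return min(train, key=lambda p: math.dist(p, query))


# Hypothetical customers: (age in years, income in rupees)
train = [(31, 60000), (60, 51000), (20, 20000), (70, 90000)]
query = (30, 50000)

# Unscaled: income differences swamp age, so the 60-year-old looks "closest".
print(nearest(train, query))  # (60, 51000)

# Scaled to 0-1 per feature: age now matters too.
scale = min_max_scaler(train)
scaled_train = [scale(p) for p in train]
idx = min(range(len(train)), key=lambda i: math.dist(scaled_train[i], scale(query)))
print(train[idx])  # (31, 60000)
```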

This is where structured learning programs, such as a data scientist course, equip professionals with the tools to preprocess and fine-tune data effectively before applying algorithms like K-NN.

Practical Applications of K-NN

From recommending movies to detecting anomalies in financial transactions, K-NN’s versatility shines across industries. In healthcare, it can support diagnosis by comparing a patient’s symptoms with those of historical cases. In marketing, it can assign customers to existing segments based on similarity in purchasing behaviour.
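For the anomaly-detection use case, one common K-NN-based approach scores each point by its distance to its k-th nearest neighbour, so points far from everything else get high scores. The sketch below is a hypothetical illustration with made-up transaction data. It assumes the two features are already on comparable scales; in practice they would be scaled first.

```python
import math


def knn_anomaly_scores(points, k):
    """Score each point by the distance to its k-th nearest other point (higher = more unusual)."""
    scores = []
    for i, p in enumerate(points):
        # Distances from p to every other point, closest first.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(dists[k - 1])
    return scores


# Hypothetical transactions: (amount in thousands, hour of day)
transactions = [(1.0, 10), (1.2, 11), (0.9, 10), (1.1, 12), (9.5, 3)]
scores = knn_anomaly_scores(transactions, k=2)
most_unusual = transactions[scores.index(max(scores))]
print(most_unusual)  # (9.5, 3)
```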

Students and professionals in a data science course in Mumbai often encounter such real-world projects, learning how to balance data volume, computational efficiency, and interpretability in deploying K-NN-based solutions.

Conclusion

The K-Nearest Neighbours algorithm exemplifies a fundamental truth in data science: that understanding proximity—how things relate and cluster—is often the key to prediction. It blends human intuition with mathematical precision, relying on the wisdom of nearby examples to guide decisions.

K-NN may not build complex internal models, but it reminds us that sometimes, simplicity guided by context can be remarkably effective. Whether used in early analytics exploration or advanced model prototyping, it remains a cornerstone in the analyst’s toolkit—teaching us that every data point exists within a meaningful neighbourhood, waiting to be understood.

Business name: ExcelR- Data Science, Data Analytics, Business Analytics Course Training Mumbai

Address: 304, 3rd Floor, Pratibha Building. Three Petrol pump, Lal Bahadur Shastri Rd, opposite Manas Tower, Pakhdi, Thane West, Thane, Maharashtra 400602

Phone: 09108238354

Email: enquiry@excelr.com